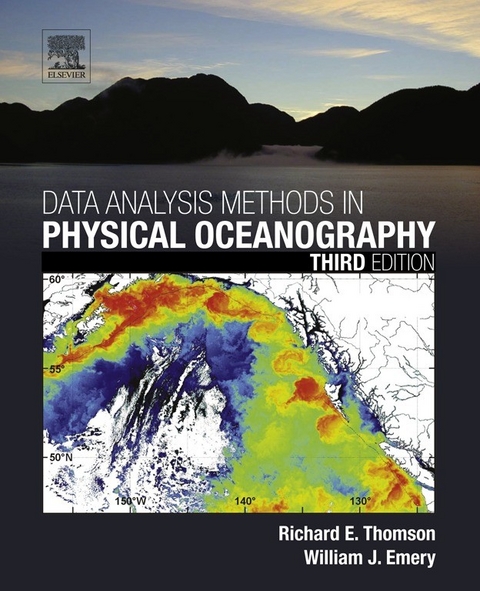

Richard E. Thomson is a researcher in coastal and deep-sea physical oceanography within the Ocean Sciences Division. His research interests include coastal oceanographic processes on the continental shelf and slope, including coastally trapped waves, upwelling, and baroclinic instability; hydrothermal venting and the physics of buoyant plumes; the linkage between circulation and zooplankton biomass aggregations at hydrothermal venting sites; the analysis and modelling of landslide-generated tsunamis; and paleoclimate studies using tree-ring records and sediment cores from coastal inlets and basins.

Data Analysis Methods in Physical Oceanography, Third Edition is a practical reference to established and modern data analysis techniques in earth and ocean sciences. Its five major sections address data acquisition and recording, data processing and presentation, statistical methods and error handling, analysis of spatial data fields, and time series analysis methods. The revised Third Edition updates the instrumentation used to collect and analyze physical oceanic data and adds new techniques, including Kalman filtering. The sections covering spectral, wavelet, and harmonic analysis are also completely revised, since these techniques have attracted significant attention over the past decade as more accurate and efficient methods of data gathering and analysis.

- Completely updated and revised to reflect new filtering techniques and major updating of the instrumentation used to collect and analyze data
- Co-authored by scientists from academe and industry, both of whom have more than 30 years of experience in oceanographic research and field work
- Significant revision of sections covering spectral, wavelet, and harmonic analysis techniques
- Examples address typical data analysis problems yet provide the reader with formulaic "recipes" for working with their own data
- Significant expansion to 350 figures, illustrations, diagrams and photos

Front Cover

DATA ANALYSIS METHODS IN PHYSICAL OCEANOGRAPHY

Copyright

Dedication

Contents

Preface

Acknowledgments

Chapter 1 - Data Acquisition and Recording

1.1 INTRODUCTION

1.2 BASIC SAMPLING REQUIREMENTS

1.3 TEMPERATURE

1.4 SALINITY

1.5 DEPTH OR PRESSURE

1.6 SEA-LEVEL MEASUREMENT

1.7 EULERIAN CURRENTS

1.8 LAGRANGIAN CURRENT MEASUREMENTS

1.9 WIND

1.10 PRECIPITATION

1.11 CHEMICAL TRACERS

1.12 TRANSIENT CHEMICAL TRACERS

Chapter 2 - Data Processing and Presentation

2.1 INTRODUCTION

2.2 CALIBRATION

2.3 INTERPOLATION

2.4 DATA PRESENTATION

Chapter 3 - Statistical Methods and Error Handling

3.1 INTRODUCTION

3.2 SAMPLE DISTRIBUTIONS

3.3 PROBABILITY

3.4 MOMENTS AND EXPECTED VALUES

3.5 COMMON PDFS

3.6 CENTRAL LIMIT THEOREM

3.7 ESTIMATION

3.8 CONFIDENCE INTERVALS

3.9 SELECTING THE SAMPLE SIZE

3.10 CONFIDENCE INTERVALS FOR ALTIMETER-BIAS ESTIMATES

3.11 ESTIMATION METHODS

3.12 LINEAR ESTIMATION (REGRESSION)

3.13 RELATIONSHIP BETWEEN REGRESSION AND CORRELATION

3.14 HYPOTHESIS TESTING

3.15 EFFECTIVE DEGREES OF FREEDOM

3.16 EDITING AND DESPIKING TECHNIQUES: THE NATURE OF ERRORS

3.17 INTERPOLATION: FILLING THE DATA GAPS

3.18 COVARIANCE AND THE COVARIANCE MATRIX

3.19 THE BOOTSTRAP AND JACKKNIFE METHODS

Chapter 4 - The Spatial Analyses of Data Fields

4.1 TRADITIONAL BLOCK AND BULK AVERAGING

4.2 OBJECTIVE ANALYSIS

4.3 KRIGING

4.4 EMPIRICAL ORTHOGONAL FUNCTIONS

4.5 EXTENDED EMPIRICAL ORTHOGONAL FUNCTIONS

4.6 CYCLOSTATIONARY EOFS

4.7 FACTOR ANALYSIS

4.8 NORMAL MODE ANALYSIS

4.9 SELF ORGANIZING MAPS

4.10 KALMAN FILTERS

4.11 MIXED LAYER DEPTH ESTIMATION

4.12 INVERSE METHODS

Chapter 5 - Time Series Analysis Methods

5.1 BASIC CONCEPTS

5.2 STOCHASTIC PROCESSES AND STATIONARITY

5.3 CORRELATION FUNCTIONS

5.4 SPECTRAL ANALYSIS

5.5 SPECTRAL ANALYSIS (PARAMETRIC METHODS)

5.6 CROSS-SPECTRAL ANALYSIS

5.7 WAVELET ANALYSIS

5.8 FOURIER ANALYSIS

5.9 HARMONIC ANALYSIS

5.10 REGIME SHIFT DETECTION

5.11 VECTOR REGRESSION

5.12 FRACTALS

Chapter 6 - Digital Filters

6.1 INTRODUCTION

6.2 BASIC CONCEPTS

6.3 IDEAL FILTERS

6.4 DESIGN OF OCEANOGRAPHIC FILTERS

6.5 RUNNING-MEAN FILTERS

6.6 GODIN-TYPE FILTERS

6.7 LANCZOS-WINDOW COSINE FILTERS

6.8 BUTTERWORTH FILTERS

6.9 KAISER–BESSEL FILTERS

6.10 FREQUENCY-DOMAIN (TRANSFORM) FILTERING

References

Appendix A - Units in Physical Oceanography

Appendix B - Glossary of Statistical Terminology

Appendix C - Means, Variances and Moment-Generating Functions for Some Common Continuous Variables

Appendix D - Statistical Tables

Appendix E - Correlation Coefficients at the 5% and 1% Levels of Significance for Various Degrees of Freedom

Appendix F - Approximations and Nondimensional Numbers in Physical Oceanography

References

Appendix G - Convolution

Appendix G CONVOLUTION AND FOURIER TRANSFORMS

Appendix G CONVOLUTION OF DISCRETE DATA

Appendix G CONVOLUTION AS TRUNCATION OF AN INFINITE TIME SERIES

Appendix G DECONVOLUTION

Index

1.2. Basic Sampling Requirements

1.2.1. Sampling Interval

$$f_N = \frac{1}{2\Delta t} \tag{1.1}$$

FIGURE 1.1 Plot of the function $F(n) = \sin(2\pi n/20 + \phi)$, where time is given by the integer $n = -1, 0, \ldots, 24$. The period $2\Delta t = 1/f_N$ is 20 units and $\phi$ is a random phase with a small magnitude in the range ±0.1 radians. Open circles denote measured points and solid points the curve F(n). Noise makes it necessary to use more than three data values to accurately define the oscillation period.
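The relation in Eqn (1.1) and the function described in the Figure 1.1 caption can be illustrated with a short Python sketch. This is only an illustration under stated assumptions, not code from the book: the function names `nyquist_frequency` and `noisy_sine`, the fixed random seed, and the uniform distribution chosen for the random phase are all hypothetical choices. The sampling interval of 10 time units is taken from the caption's statement that the period 2Δt is 20 units.

```python
import math
import random


def nyquist_frequency(dt):
    """Return the Nyquist frequency 1/(2*dt) for a sampling interval dt."""
    if dt <= 0:
        raise ValueError("sampling interval must be positive")
    return 1.0 / (2.0 * dt)


def noisy_sine(n_values, period=20.0, max_phase=0.1, seed=0):
    """Evaluate sin(2*pi*n/period + phase) with a small random phase.

    The phase is drawn uniformly from [-max_phase, +max_phase] radians;
    the uniform distribution and the seed are assumptions made here.
    """
    rng = random.Random(seed)
    return [
        math.sin(2.0 * math.pi * n / period + rng.uniform(-max_phase, max_phase))
        for n in n_values
    ]


# Integer times n = -1, 0, ..., 24, as in the figure caption.
n_values = list(range(-1, 25))
samples = noisy_sine(n_values)

# The caption gives 2*dt = 20 time units, so dt = 10 and f_N = 1/20.
dt = 10.0
f_n = nyquist_frequency(dt)
print(f"Nyquist frequency: {f_n} cycles per unit time")
print(f"Shortest resolvable period: {1.0 / f_n} time units")
```

Because the phase perturbation is bounded by 0.1 radians, each noisy sample differs from the noise-free sine by at most 0.1, which is small but enough to blur a period estimate based on only a few points.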

1.2.2. Sampling Duration

| Publication date (per publisher) | 14 July 2014 |
|---|---|
| Language | English |
| Subject area | Natural Sciences ► Biology ► Ecology / Nature Conservation |
| | Natural Sciences ► Biology ► Zoology |
| | Natural Sciences ► Earth Sciences ► Hydrology / Oceanography |
| | Natural Sciences ► Physics / Astronomy |
| | Technology ► Environmental Engineering / Biotechnology |
| ISBN-10 | 0-12-387783-0 / 0123877830 |
| ISBN-13 | 978-0-12-387783-3 / 9780123877833 |


Size: 54.3 MB

Copy protection: Adobe DRM

Adobe DRM is a copy-protection scheme intended to protect the eBook against misuse. The eBook is authorized to your personal Adobe ID at download time. You can then read the eBook only on devices that are also registered to your Adobe ID.

File format: PDF (Portable Document Format)

With its fixed page layout, PDF is particularly well suited to technical books with columns, tables, and figures. A PDF can be displayed on almost all devices but is only of limited suitability for small displays (smartphone, eReader).

System requirements:

PC/Mac: You can read this eBook on a PC or Mac. You will need a …

eReader: This eBook can be read on (almost) all eBook readers. It is not compatible with the Amazon Kindle, however.

Smartphone/tablet: Whether Apple or Android, you can read this eBook. You will need a …

Buying eBooks from abroad

For tax reasons, we can sell eBooks only within Germany and Switzerland. Unfortunately, we cannot fulfill eBook orders from other countries.

Size: 35.7 MB

Copy protection: Adobe DRM

File format: EPUB (Electronic Publication)

EPUB is an open standard for eBooks and is particularly well suited to fiction and non-fiction. The running text adapts dynamically to the display and font size, so EPUB also works well on mobile reading devices.
