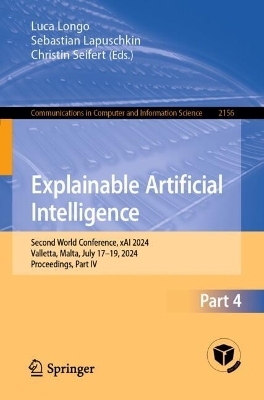

Explainable Artificial Intelligence

Springer International Publishing (Publisher)

978-3-031-63802-2 (ISBN)

- Not yet published; scheduled for release on 2 August 2024

This four-volume set constitutes the refereed proceedings of the Second World Conference on Explainable Artificial Intelligence, xAI 2024, held in Valletta, Malta, during July 17-19, 2024.

The 95 full papers presented were carefully reviewed and selected from 204 submissions. The conference papers are organized in topical sections on:

Part I - intrinsically interpretable XAI and concept-based global explainability; generative explainable AI and verifiability; notion, metrics, evaluation and benchmarking for XAI.

Part II - XAI for graphs and computer vision; logic, reasoning, and rule-based explainable AI; model-agnostic and statistical methods for eXplainable AI.

Part III - counterfactual explanations and causality for eXplainable AI; fairness, trust, privacy, security, accountability and actionability in eXplainable AI.

Part IV - explainable AI in healthcare and computational neuroscience; explainable AI for improved human-computer interaction and software engineering for explainability; applications of explainable artificial intelligence.

- Explainable AI in healthcare and computational neuroscience
  - SRFAMap: a method for mapping integrated gradients of a CNN trained with statistical radiomic features to medical image saliency maps
  - Transparently Predicting Therapy Compliance of Young Adults Following Ischemic Stroke
  - Precision medicine in student health: Insights from Tsetlin Machines into chronic pain and psychological distress
  - Evaluating Local Explainable AI Techniques for the Classification of Chest X-ray Images
  - Feature importance to explain multimodal prediction models. A clinical use case
  - Identifying EEG Biomarkers of Depression with Novel Explainable Deep Learning Architectures
  - Increasing Explainability in Time Series Classification by Functional Decomposition
  - Towards Evaluation of Explainable Artificial Intelligence in Streaming Data
  - Quantitative Evaluation of xAI Methods for Multivariate Time Series - A Case Study for a CNN-based MI Detection Model
- Explainable AI for improved human-computer interaction and Software Engineering for explainability
  - Influenciae: A library for tracing the influence back to the data-points
  - Explainability Engineering Challenges: Connecting Explainability Levels to Run-time Explainability
  - On the Explainability of Financial Robo-advice Systems
  - Can I trust my anomaly detection system? A case study based on explainable AI
  - Explanations considered harmful: The Impact of misleading Explanations on Accuracy in hybrid human-AI decision making
  - Human emotions in AI explanations
  - Study on the Helpfulness of Explainable Artificial Intelligence
- Applications of explainable artificial intelligence
  - Pricing Risk: An XAI Analysis of Irish Car Insurance Premiums
  - Exploring the Role of Explainable AI in the Development and Qualification of Aircraft Quality Assurance Processes: A Case Study
  - Explainable Artificial Intelligence applied to Predictive Maintenance: Comparison of Post-hoc Explainability Techniques
  - A comparative analysis of SHAP, LIME, ANCHORS, and DICE for interpreting a dense neural network in Credit Card Fraud Detection
  - Application of the representative measure approach to assess the reliability of decision trees in dealing with unseen vehicle collision data
  - Ensuring Safe Social Navigation via Explainable Probabilistic and Conformal Safety Regions
  - Explaining AI Decisions: Towards Achieving Human-Centered Explainability in Smart Home Environments
  - AcME-AD: Accelerated Model Explanations for Anomaly Detection

| Release date (per publisher) | 2.8.2024 |
|---|---|
| Series | Communications in Computer and Information Science |
| Additional information | XVII, 466 p. 149 illus., 136 illus. in color. |
| Place of publication | Cham |
| Language | English |
| Dimensions | 155 x 235 mm |
| Subject area | Mathematics / Computer Science ► Computer Science ► Networks |
| | Computer Science ► Theory / Study ► Artificial Intelligence / Robotics |
| Keywords | Ante-hoc approaches for interpretability • argumentative-based approaches for explanations • Artificial Intelligence • Auto-encoders & explainability of latent spaces • Case-based explanations for AI systems • causal inference & explanations • convolutional neural networks • decomposition of neural network-based models for XAI • explainability • Explainable Artificial Intelligence • Graph neural networks for explainability • Human rights for explanations in AI systems • interpretable machine learning • Interpretable representational learning • Interpreting & explaining Convolutional Neural Networks • Model-specific vs model-agnostic methods for XAI • natural language processing for explanations • Neural networks • Neuro-symbolic reasoning for XAI • reinforcement learning for enhancing XAI |
| ISBN-10 | 3-031-63802-6 / 3031638026 |
| ISBN-13 | 978-3-031-63802-2 / 9783031638022 |
| Condition | New |
