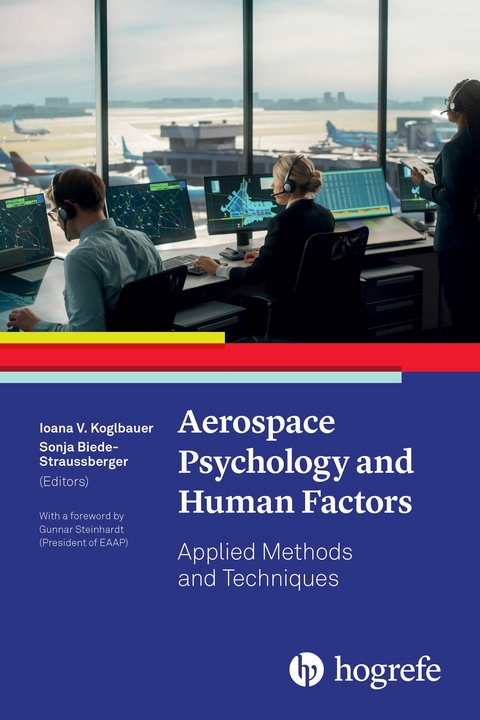

Aerospace Psychology and Human Factors (eBook)

286 pages

Hogrefe Publishing (publisher)

978-1-61334-647-1 (ISBN)

Chapter 1

Integrating Human Factors Into the System Design Process

Brittany Bishop, Pauline Harrington, Nancy Leveson, and Rodrigo Rose

Abstract

Hazard analysis is the basis of engineering for safety. However, in such analyses, human factors are often reduced to “human failure,” disregarding the systemic issues that lead to flawed decisions. A new, more powerful hazard analysis technique, called “system-theoretic process analysis” (STPA), combines sophisticated human factors, hardware design, software design, and even social systems in one integrated model and analysis. STPA can be used to identify conditions and events that can lead to an accident or mission loss so that designs can prevent or minimize losses. Safety assurance is typically carried out separately from system design and in later stages of development. By the time these assurance processes are used, it is often too late to effectively modify a system to address any safety issues that are found. STPA helps overcome these problems when used by an integrated team of engineering specialists, including human factors experts, to identify potential scenarios leading to unsafe behavior from the beginning of the design process.

Keywords

aviation psychology, human factors in system engineering, system safety engineering, STPA

The Goal

Hazard analysis is the foundation of engineering for safety. It is used to identify the hazards, which are defined as system states or sets of conditions that, together with a particular worst-case environment, will lead to a loss (Leveson, 2012). Once identified, this information can be used in system development and operations to eliminate these hazards or, if that is not possible, to reduce their likelihood or to minimize their potential impact. Unfortunately, the complex software-intensive systems being built today cannot be fully analyzed using traditional hazard analysis techniques. In addition, human contributions to risk have traditionally been oversimplified by engineers in the hazard analysis process, thus limiting the usefulness of the hazard analysis process in reducing overall system risk.

The role of humans is changing as our systems become increasingly automated. Rather than directly controlling a potentially dangerous system, operators today are more often supervising automation and taking over in the cases where automation is not able to cope. It is no longer useful to only look at simple human mistakes in reading a dial or operating controls. The cognitively complex activities in which operators are now engaged do not lend themselves to simple failure analyses.

At the same time, some systems are designed such that a human error is inevitable, and then the loss is blamed on the human rather than on the system design (Leveson, 2019). Hazards may result from automation design that induces erroneous or dangerous operator behavior. Sometimes interface changes can alleviate these human errors, but often interface design fixes alone are not enough.

Human–machine interactions are greatly affected by the design of both the software and the hardware in concert with the design of the activities and functions provided by the operator. Changing the software, hardware, and human activities is the most direct and effective way to eliminate interaction problems as opposed to simply changing the interface between the human operator and the rest of the system. To reduce risk most effectively, the design or redesign of the functionality of the software and hardware and of the activities assigned to the operator and to the automation is needed rather than merely the design or redesign of the displays and controls.

In addition, today’s complex, highly automated systems argue for the need for integrated system analyses and design processes. In the analysis and design of complex systems, it is not enough to separate the efforts in hardware design, software design, and human factors. Successful system design can only be achieved by engineers, human factors experts, and application experts working together. Obstacles to this type of collaboration stem from limitations in training and education, the lack of common languages and models among different specialties, or an overly narrow view of one’s responsibilities. These obstacles need to be overcome to successfully build safer systems. This chapter presents an approach involving new modeling and analysis tools that will allow all the engineering specialties to use common tools and work more effectively together.

An overriding assumption in this chapter is the systems theory principle that human behavior is impacted by the design of the system in which it occurs. If we want to change operator behavior, we have to change the design of the system in which the operator is working. For example, if the design of the system is confusing the operators, (1) we can try to train the operators not to be confused, which will be of limited usefulness, (2) we can try to fix the problem by providing more or better information through the interface, or (3) we can redesign the system to be less confusing. The third approach will be the most effective.

Simply telling operators to follow detailed procedures that may turn out to be wrong in special circumstances or relying on training to ensure they do what they “should” do – when that may only be apparent in hindsight – will simply guarantee that unnecessary accidents will occur. The alternative is to ask how we can design to reduce operator errors or, conversely, identify what design features induce human error. In other words, we must design to support the operator.

A New Foundation for Integrated System Analysis

Achieving this goal will require new modeling and analysis tools. Traditional hazard modeling and analysis techniques do not have the power to handle complex systems today. They are based on a very simple model of causality that assumes accidents are caused by component failures. A new model of accident causality, called the “system-theoretic accident model and process” (STAMP), comprises more complex types of causal factors, including interactions among system components and including the operators (Leveson, 2012). In this enhanced model of causality, accidents may result from unsafe interactions among components that may not have “failed.” In other words, each system component satisfies the specified requirements but the overall system design is unsafe. For example, the software and hardware satisfy their specified requirements and the operators correctly implement the procedures they were taught to use.

As an example, consider the crash of a Red Wings Airlines Tupolev (Tu-204) aircraft that was landing in Moscow in 2012. A soft touchdown made runway contact a little later than usual. There was also a crosswind, which meant that the weight-on-wheels switches did not activate. Because the software did not think that the aircraft was on the ground and because it was programmed to protect against activation of the thrust reversers while in the air (which is hazardous), the command of the pilot to activate the thrust reversers was ignored by the software. At the same time, the pilots assumed that the thrust reversers would deploy as they always do, and quickly engaged high engine power to stop sooner. Instead, the pilot command accelerated the aircraft forward, and it eventually collided with a highway embankment (Leveson & Thomas, 2018).

Note that nothing failed in this accident. The software satisfied its requirements and behaved exactly the way the programmers were told it should. The pilots had no way of knowing that the thrust reversers would not activate. There were no hardware failures. The software performed exactly as it was designed to do. The humans acted reasonably. In complex systems, human and technical considerations cannot be isolated.
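The interaction in this accident can be illustrated with a deliberately simplified toy model. The sketch below is not the actual Tu-204 control logic; the class `AircraftState` and the functions `reversers_deployed` and `net_thrust_direction` are hypothetical names introduced only for illustration. It assumes a single rule taken from the description above: the software honors a thrust-reverser command only when the weight-on-wheels switches indicate ground contact.

```python
from dataclasses import dataclass


@dataclass
class AircraftState:
    # All fields are illustrative assumptions, not real avionics signals.
    weight_on_wheels: bool    # True once the gear switches register ground contact
    reverser_commanded: bool  # pilot has selected reverse thrust
    throttle_high: bool       # pilot has advanced engine power


def reversers_deployed(state: AircraftState) -> bool:
    """Hypothetical interlock: deploy reversers only if the software
    believes the aircraft is on the ground (the stated requirement)."""
    return state.reverser_commanded and state.weight_on_wheels


def net_thrust_direction(state: AircraftState) -> str:
    """Direction of the resulting thrust in this toy model."""
    if not state.throttle_high:
        return "idle"
    return "reverse" if reversers_deployed(state) else "forward"


# Normal landing: switches activate, so high power produces reverse thrust.
normal = AircraftState(weight_on_wheels=True, reverser_commanded=True, throttle_high=True)

# Soft touchdown with crosswind: switches do not activate, so the same
# pilot actions produce forward thrust.
soft = AircraftState(weight_on_wheels=False, reverser_commanded=True, throttle_high=True)

print(net_thrust_direction(normal))
print(net_thrust_direction(soft))
```

In both cases every function behaves as specified and the pilot inputs are identical; only the state of the switches differs. The unsafe outcome comes from the combination of a correct interlock and a reasonable operator action, which is the kind of scenario a component-failure model does not capture.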

These types of accidents are enabled by the inability of designers and operators to completely predict and understand all the potential interactions in today’s tightly coupled and complex systems. That is, the error is in the overall system design and how the...

| Publication date (per publisher) | 12 August 2024 |
|---|---|
| Language | English |
| Subject area | Humanities ► Psychology |
| Keywords | Aviation Psychology • aviation safety culture • Human factors in aviation |
| ISBN-10 | 1-61334-647-6 / 1613346476 |
| ISBN-13 | 978-1-61334-647-1 / 9781613346471 |
