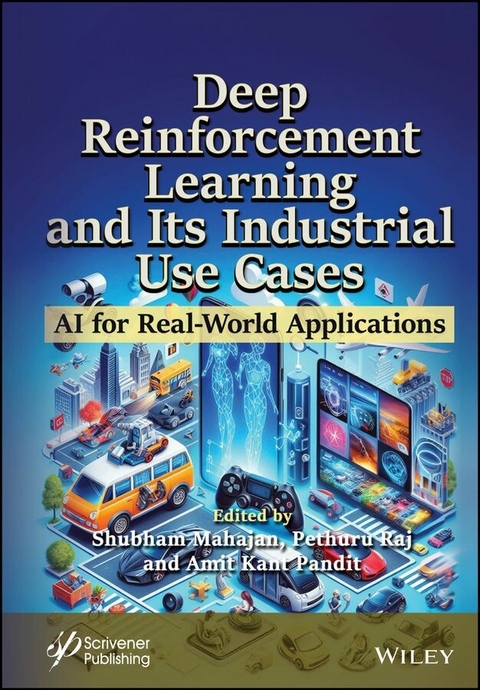

Deep Reinforcement Learning and Its Industrial Use Cases (eBook)

416 pages

Wiley-Scrivener (publisher)

978-1-394-27256-3 (ISBN)

This book serves as a bridge connecting the theoretical foundations of DRL with practical, actionable insights for implementing these technologies in a variety of industrial contexts, making it a valuable resource for professionals and enthusiasts at the forefront of technological innovation.

Deep Reinforcement Learning (DRL) represents one of the most dynamic and impactful areas of research and development in the field of artificial intelligence. Bridging the gap between decision-making theory and powerful deep learning models, DRL has evolved from academic curiosity to a cornerstone technology driving innovation across numerous industries. Its core premise, enabling machines to learn optimal actions within complex environments through trial and error, has broad implications, from automating intricate decision processes to optimizing operations that were previously beyond the reach of traditional AI techniques.

'Deep Reinforcement Learning and Its Industrial Use Cases: AI for Real-World Applications' is an essential guide for anyone eager to understand the nexus between cutting-edge artificial intelligence techniques and practical industrial applications. This book not only demystifies the complex theory behind deep reinforcement learning (DRL) but also provides a clear roadmap for implementing these advanced algorithms in a variety of industries to solve real-world problems. Through a careful blend of theoretical foundations, practical insights, and diverse case studies, the book offers a comprehensive look into how DRL is revolutionizing fields such as finance, healthcare, manufacturing, and more, by optimizing decisions in dynamic and uncertain environments.

This book distills years of research and practical experience into accessible and actionable knowledge. Whether you're an AI professional seeking to expand your toolkit, a business leader aiming to leverage AI for competitive advantage, or a student or academic researching the latest in AI applications, this book provides valuable insights and guidance. Beyond just exploring the successes of DRL, it critically examines challenges, pitfalls, and ethical considerations, preparing readers to not only implement DRL solutions but to do so responsibly and effectively.

Audience

The book will be read by researchers, postgraduate students, and industry engineers in machine learning and artificial intelligence, as well as those in business and industry seeking to understand how DRL can be applied to solve complex industry-specific challenges and improve operational efficiency.

Shubham Mahajan, PhD, is an assistant professor in the School of Engineering at Ajeenkya D Y Patil University, Pune, Maharashtra, India. He has eight Indian patents, one Australian patent, and one German patent to his credit in artificial intelligence and image processing. He has authored/co-authored more than 50 publications including peer-reviewed journals and conferences. His main research interests include image processing, video compression, image segmentation, fuzzy entropy, and nature-inspired computing methods with applications in optimization, data mining, machine learning, robotics, and optical communication.

Pethuru Raj, PhD, is chief architect and vice president at Reliance Jio Platforms Ltd in Bangalore, India. He has a PhD in computer science and automation from the Indian Institute of Science in Bangalore, India. His areas of interest focus on artificial intelligence, model optimization, and reliability engineering. He has published thirty research papers and edited forty-two books.

Amit Kant Pandit, PhD, is an associate professor in the School of Electronics & Communication Engineering at Shri Mata Vaishno Devi University, India. He has authored/co-authored more than 60 publications including peer-reviewed journals and conferences. He has two Indian patents and one Australian patent to his credit in artificial intelligence and image processing. His main research interests are image processing, video compression, image segmentation, fuzzy entropy, and nature-inspired computing methods with applications in optimization.

1

Deep Reinforcement Learning Applications in Real-World Scenarios: Challenges and Opportunities

Sunilkumar Ketineni and Sheela J.*

School of Computer Science and Engineering, VIT-AP University, Amaravathi, Andhra Pradesh, India

Abstract

Deep reinforcement learning (DRL) has proven highly effective at solving complex problems in a variety of fields, from game playing to robotic control. Its transfer from controlled environments to practical applications, however, poses a variety of challenges and opportunities. This chapter examines the opportunities and challenges of applying DRL in real-world settings. It highlights the pressing issues of data scarcity and safety concerns in critical domains like autonomous driving and medical diagnostics, emphasizing the need for sample-efficient learning and risk-aware decision-making techniques. Additionally, the chapter uncovers the immense potential of DRL to transform industries, optimizing complex processes in finance, energy management, and industrial operations, leading to increased efficiency and reduced costs. This chapter serves as a valuable resource for researchers, practitioners, and decision-makers seeking insights into the evolving landscape of DRL in practical settings.

Keywords: Deep reinforcement learning, decision-making, transfer learning, meta-learning, domain adaptation

1.1 Introduction

The deep reinforcement learning (DRL) paradigm has become a potent tool for teaching agents to make sequential decisions in challenging situations. Its uses can be found in a wide range of industries, including robotics, autonomous vehicles, banking, and healthcare [1]. Translating DRL algorithms from controlled laboratory conditions to real-world scenarios, however, brings both difficulties and opportunities. This chapter explores the challenging landscape of implementing DRL in real-world settings. DRL has already shown remarkable potential in areas ranging from gaming to robotics and autonomous systems.

Figure 1.1 Deep reinforcement learning agent with an updated structure.

DRL has recently attracted considerable interest as a potentially effective method for handling challenging decision-making problems, with possible applications ranging from robotics and autonomous driving to healthcare and finance. Figure 1.1 shows the deep reinforcement learning agent with an updated structure.

1.1.1 Problems with Real-World Implementation

- Sample efficiency: Sample efficiency is the ability of a learning algorithm to perform well with a small number of training instances or samples. In machine learning and reinforcement learning, models often need to be trained on large amounts of data to achieve excellent performance [2]. However, gathering data is often expensive, time-consuming, or even impractical in real-world situations. Sample efficiency is crucial in industries like healthcare, manufacturing, and marketing, where it affects quality control, market research, and product development.

- Reinforcement learning (RL): Agents learn how to interact with their environment so as to maximize reward signals. Sample-efficient RL algorithms need fewer interactions with the environment to learn effective policies, which is crucial when interacting with the environment is expensive, risky, or time-consuming.

- Supervised learning: In supervised learning, models learn from labeled examples to make predictions or classify data. Sample-efficient algorithms can perform well with fewer labeled examples and reduce the need for labor-intensive manual labeling.

- Transfer learning: Transfer learning entails training a model on one task or domain and then applying it to a related task or domain. Even when little data is available in the target domain, transfer learning techniques can reuse what was learned in the source domain to improve performance.

- Active learning: In active learning, a model actively chooses the most informative examples for labeling in order to improve its performance [3]. Sample-efficient active learning procedures identify and label the most useful instances quickly, which saves time and effort overall.

- Meta-learning: In meta-learning, models are trained on a range of tasks to improve their capacity to learn new tasks quickly and from little data. Since models must generalize from a limited number of examples, sample efficiency is a crucial component of effective meta-learning.
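As an illustration of the active learning item above, the following is a minimal sketch of uncertainty sampling, one common way to pick the "most informative" examples. It is not taken from the chapter: the one-dimensional logistic model, its parameters, and the unlabeled pool are all hypothetical values chosen for the example.

```python
import math

def predict_proba(weight: float, bias: float, x: float) -> float:
    """Probability of the positive class under a 1-D logistic model."""
    return 1.0 / (1.0 + math.exp(-(weight * x + bias)))

def most_uncertain(pool, weight, bias, k=1):
    """Return the k unlabeled points whose predicted probability is closest to 0.5."""
    return sorted(pool, key=lambda x: abs(predict_proba(weight, bias, x) - 0.5))[:k]

# Hypothetical unlabeled pool and hypothetical current model parameters.
unlabeled_pool = [-3.0, -1.0, 0.2, 1.5, 4.0]
queries = most_uncertain(unlabeled_pool, weight=1.0, bias=0.0, k=2)
print(queries)  # [0.2, -1.0]
```

Only the two points nearest the decision boundary are sent for labeling, which is how this strategy tries to save labeling effort.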

Contributions of the Book Chapter

In this chapter, we provide an in-depth exploration of the challenges and opportunities in applying deep reinforcement learning (DRL) in practical situations, offering valuable insights into how innovative techniques are addressing sample efficiency, data scarcity, and safety concerns while also highlighting the immense potential for DRL to transform industries by streamlining complex processes and enhancing decision-making across various domains.

1.2 Application to the Real World

Data may be sparse or difficult to obtain in many real-world scenarios, such as robotics or medical applications. Sample-efficient algorithms make it feasible to apply machine learning techniques in these circumstances.

1.2.1 Safety and Robustness

Deep reinforcement learning (DRL) agents deployed in realistic situations must take safety and robustness into account. Deep reinforcement learning involves training agents to choose actions that maximize a cumulative reward signal as they interact with their environment [4]. To avoid unintended effects and unanticipated actions in complex and dynamic real-world contexts, it is crucial to ensure the safety and robustness of these agents. The main ideas and issues around safety and robustness in DRL are summarized below:

a) Safety: In DRL, safety refers to an agent’s capacity to operate within predetermined boundaries and to refrain from actions that might lead to disastrous consequences or violations of safety rules. Safety must be ensured through the following:

- Constraint enforcement: Agents should be built to adhere to safety constraints, which define states or behaviors that an agent must avoid. This may entail penalizing or blocking actions that violate these constraints.

- Handling uncertainty: Real-world settings are dynamic and unpredictable. Agents should be able to manage uncertainty in their observations and make decisions that are robust to changes in the environment.

- Learning from human feedback: Including feedback from people during training can help agents acquire safe behaviors and prioritize actions that are consistent with human preferences and values.
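The two options named under constraint enforcement, blocking an unsafe action and penalizing it, can be sketched as follows. This is an illustrative toy, not the chapter's method: the one-dimensional position, the bounds, the action set, and the penalty size are assumptions made for the example.

```python
def safe_actions(position: int, actions, lower: int, upper: int):
    """Keep only actions whose resulting position stays inside [lower, upper]."""
    return [a for a in actions if lower <= position + a <= upper]

def shaped_reward(reward: float, next_position: int, lower: int, upper: int,
                  penalty: float = 10.0) -> float:
    """Subtract a fixed penalty when the next position violates the constraint."""
    violated = not (lower <= next_position <= upper)
    return reward - penalty if violated else reward

# Hypothetical agent at position 4 on a track bounded by [0, 5].
allowed = safe_actions(position=4, actions=[-1, 0, 1, 2], lower=0, upper=5)
print(allowed)                                                # [-1, 0, 1]
print(shaped_reward(1.0, next_position=6, lower=0, upper=5))  # -9.0
```

Blocking (masking) prevents the violation outright but requires knowing the effect of each action in advance. The penalty variant only discourages violations and lets the agent learn the boundary from experience.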

b) Robustness: In DRL, robustness refers to an agent’s capacity to function successfully in a variety of settings and circumstances, even in the presence of noise, disturbance, or variation. Developing robustness entails the following:

- Domain adaptation: An agent trained in one environment will not necessarily transfer to another. Transfer learning and domain adaptation techniques help agents adapt to new environments more successfully.

- Adversarial robustness: Agents may be susceptible to adversarial attacks, in which minor changes in the input cause significant changes in behavior. Agents should be designed to withstand such attacks [5].

- Distribution shift: Real-world settings may undergo distributional changes over time, which requires agents to keep adapting and learning as the data distribution changes.
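To make the adversarial-attack concern concrete, here is a small sketch in which a tiny, sign-based change to an observation flips the action of a linear policy. The policy weights, the observation, and the perturbation size are hypothetical. The perturbation follows the spirit of gradient-sign attacks, since for a linear score the gradient with respect to the observation is the weight vector.

```python
def act(weights, obs):
    """Pick action 1 if the linear score is positive, else action 0."""
    score = sum(w * o for w, o in zip(weights, obs))
    return 1 if score > 0 else 0

def perturb(weights, obs, epsilon):
    """Shift each observation component by epsilon against the sign of its weight."""
    def sign(v):
        return (v > 0) - (v < 0)
    return [o - epsilon * sign(w) for w, o in zip(weights, obs)]

weights = [2.0, -1.0]   # hypothetical policy parameters
obs = [0.3, 0.5]        # score is about 0.1, so the policy picks action 1
adv = perturb(weights, obs, epsilon=0.05)  # each component moves by only 0.05

print(act(weights, obs))  # 1
print(act(weights, adv))  # 0
```

The score drops by epsilon times the sum of the absolute weights (0.15 here), which is enough to cross the decision boundary even though no observation component changed by more than 0.05.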

c) Challenges: Creating reliable and safe DRL agents for real-world situations involves several difficulties, namely:

- Sample efficiency: In the real world, training DRL agents can take a lot of time and data. Effective exploration strategies are needed to cut down the number of interactions required for learning.

- Exploration vs. exploitation: Finding safe and efficient policies depends on striking a balance between exploration (trying new actions) and exploitation (choosing actions based on knowledge already acquired).

- Reward engineering: It is difficult to design reward functions that lead agents to the desired behaviors while avoiding undesired side effects.

- Generalization: A fundamental issue, especially in highly dynamic contexts, is ensuring that agents can transfer their learned behaviors to novel and unanticipated situations.

- Ethics: DRL agents should follow human-defined values, observe ethical norms, and avoid bias.
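The exploration vs. exploitation trade-off listed above is often illustrated with an epsilon-greedy rule on a multi-armed bandit. The sketch below is one such illustration under stated assumptions: the three Bernoulli arms, their success probabilities, the value of epsilon, and the seed are all hypothetical.

```python
import random

def select_arm(estimates, epsilon, rng):
    """Explore a random arm with probability epsilon, otherwise exploit the best estimate."""
    if rng.random() < epsilon:
        return rng.randrange(len(estimates))
    return max(range(len(estimates)), key=lambda i: estimates[i])

def run_bandit(true_means, steps, epsilon, seed=0):
    """Run epsilon-greedy on Bernoulli arms and return value estimates and pull counts."""
    rng = random.Random(seed)
    estimates = [0.0] * len(true_means)
    counts = [0] * len(true_means)
    for _ in range(steps):
        arm = select_arm(estimates, epsilon, rng)
        reward = 1.0 if rng.random() < true_means[arm] else 0.0
        counts[arm] += 1
        # Incremental running mean of the rewards observed for this arm.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts

# Hypothetical arms with success probabilities 0.2, 0.5, and 0.8.
estimates, counts = run_bandit([0.2, 0.5, 0.8], steps=2000, epsilon=0.1)
print(estimates, counts)
```

With `epsilon=0.0` the rule never explores and can lock onto a poor arm. Larger values spend more pulls on arms already known to be worse, which is the cost side of the trade-off.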

d) Mitigation methods: Researchers and practitioners are investigating different mitigation measures to address these problems:

- Safe learning constraints: Explicitly incorporating safety constraints into the agent’s learning process is intended to keep it from behaving in a dangerous manner.

- Imitation learning: Agents are taught safe behavior from demonstrations, so that they can avoid exploring harmful situations.

- Risk-sensitive learning: This refers to...

| Publication date (per publisher) | 1.10.2024 |
|---|---|
| Language | English |
| Subject area | Mathematics / Computer Science ► Computer Science |
| Keywords | Advanced AI Techniques • AI in Manufacturing • AI Optimization Strategies • Artificial Intelligence in Finance • Artificial Intelligence in Logistics • Cutting-Edge AI Research • Deep Reinforcement Learning • DRL Challenges and Solutions • DRL Industrial Applications • DRL Technology • Future of AI in Industry • Healthcare AI Innovations • Implementing AI Solutions • Machine learning for business • Real-World AI Use Cases |
| ISBN-10 | 1-394-27256-1 / 1394272561 |
| ISBN-13 | 978-1-394-27256-3 / 9781394272563 |


Size: 11.3 MB

Copy protection: Adobe DRM

Adobe DRM is a copy-protection scheme intended to protect the eBook against misuse. The eBook is authorized to your personal Adobe ID at download time. You can then read the eBook only on devices that are also registered to your Adobe ID.


File format: EPUB (Electronic Publication)

EPUB is an open standard for eBooks and is particularly well suited to fiction and non-fiction. The body text adapts dynamically to the display and font size, so EPUB also works well on mobile reading devices.

System requirements:

PC/Mac: You can read this eBook on a PC or Mac.

eReader: This eBook can be read on (almost) all eBook readers. It is not compatible with the Amazon Kindle, however.

Smartphone/Tablet: Whether Apple or Android, you can read this eBook.
